Swapping strategy decks for an open notebook

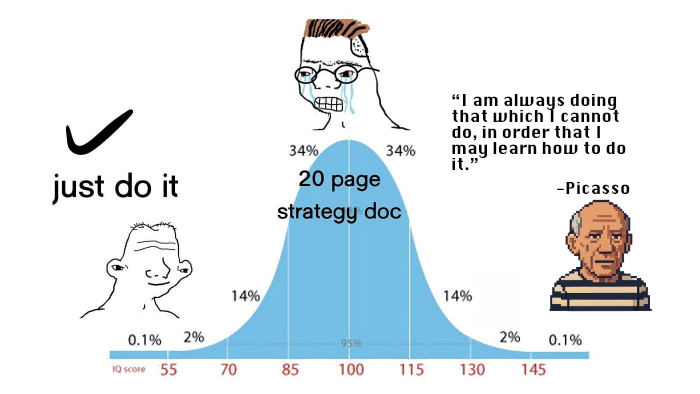

Every consultant knows the moment of truth: you're at the whiteboard trying to explain your brilliant strategy and suddenly realise you don't actually understand it. The act of explaining, whether through a slide deck, a diagram, or plain writing, ruthlessly exposes fuzzy thinking. That is what this blog is for: not to get a million followers or showcase knowledge I already have, but to develop understanding I don't have.

From Bauhaus to backlogs and blank pages

My path to this realisation wound through three seemingly disconnected disciplines. I started in industrial design, following in my parents' creative footsteps: my mother in graphic design, my father in TV and film production. I loved the tangible satisfaction of taking an idea through sketching, prototyping, and finally holding a finished object. But in my final year, while doing graphic and web design on the side, I discovered that the same creative high could come from purely abstract computer code. One degree became two when I jumped straight into a Master's in computer science.

From there, I stumbled into tech consulting at Accenture, where PowerPoint decks and architecture diagrams became my primary creative output. After a few years of matching pithy action titles to insightful diagrams, I went back for an MBA at AGSM (with a stint at Chicago Booth), chasing my fascination with strategy. Years later, at Atlassian, I hit a culture shock: no decks, everything in Confluence. One of the colleagues I most admired there wrote an excellent internal blog post about communication and effective writing. I referenced it countless times, but I learned that the best teacher for skills like this is simply diving in and trying. Nothing beats hands-on practice and iterative improvement.

My initial horror at abandoning my carefully crafted slides for walls of text taught me something crucial: whether it's a slide, a whiteboard diagram, or long-form prose, the medium doesn't matter. Clear thinking does.

Montaigne or midterms – Why writing forces real understanding

https://youtu.be/lML0ndFlBuc?si=0Pd-NtfquUONVO4g

A great explainer of this idea of writing as a tool for learning is this short YouTube video by Giles McMullen. In it he explains how essay writing is a stealth learning technique disguised as homework: the kind of thing that sounds boring until you realise it's forcing your brain to do the heavy lifting that actually makes knowledge stick. He traces this back to Montaigne, the French philosopher who in the 1500s invented the essay form not to show off his ideas but to figure out what his ideas actually were.

When you write an essay, you can't just passively absorb information as you do when reading. You're forced to retrieve facts from memory, analyse arguments, evaluate evidence, and create something original, which runs through every level of cognitive skill that education researchers have identified as essential for deep learning. The beautiful thing is that this works for any subject, because it's not really about writing; it's about thinking clearly. Unclear thinking becomes impossible to hide when you're staring at a blank page trying to explain why a company should change its pricing model, how AI works, or why you decided to spend time writing a blog no one will ever read!

Because Skynet won’t explain itself – and neither will GPT

I use AI every day now. As a sounding board when I'm shaping strategy narratives, as a research assistant when I'm juggling five projects, even to sanity-check ideas before they hit the whiteboard. But here's what bothers me: I have no idea how any of it actually works.

This isn't just intellectual curiosity. In consulting, fuzzy thinking dies the moment you try to explain it to a client. Yet here I am, building workflows around systems that are essentially black boxes. I can prompt them, direct them, even get surprisingly good results from them—but I couldn't explain their decision-making process if my career depended on it.

Twenty years ago, when neural networks were a dry computer science topic that put half my classmates to sleep, this wouldn't have mattered. Back then, machine learning was the domain of a few comp-sci academics, and the sexiest algorithm we studied was simulated annealing. We never imagined these concepts would become the foundation of billion-dollar companies like OpenAI and Anthropic.

But now, sitting in 2025 and watching AI development accelerate, I'm struck by the uncomfortable realisation that I might understand less about these systems now than I did when they were just theory in a textbook. At least then, I could trace through the mathematics step by step.

Why I think I need to understand AI much better

The gap between surface knowledge and deep understanding becomes obvious with AI. Take this viral LinkedIn post by Ken Cheng, who jokes about defeating AI by inserting nonsense: 'waffle iron 40% off... Strawberry mango Forklift.' It's comedy, but it reveals something serious—most of us, including Cheng, don't actually understand how large language models work. We grasp just enough to make jokes or feel threatened, but not enough to engage meaningfully with the technology reshaping our world.

That's why I want to start with AI—not because I harbour illusions of becoming the next Benedict Evans, but because I'm tired of operating in that dangerous zone of "I get it in my head" without actually getting it at all. If we're going to build our professional lives around these tools, we need to understand what's happening under the hood.

Even if the hood is welded shut.

"—"

Let’s clear this up: does every sentence with an em dash mean it was written by AI? Hardly. Anyone who’s ever glanced at typesetting knows the difference between a hyphen “-”, an en dash “–”, and the mighty em dash “—”. I’ll admit I never touch the en dash (life’s too short), but the em dash just looks too clean not to use—so if that makes me sound like a bot, fine.

A challenge

Using a tool isn’t the same as understanding it. So I’m giving myself a challenge: twenty explainers on AI. Some will be theory—gradient descent, embeddings, transformers—others will be experiments that may or may not crash spectacularly. Let's see how it all goes!
