What Even Is AI? And Why the Answer Matters More Than You Think

AI has ruined science fiction. At least for me. I remember watching Star Wars as a kid and willingly suspending disbelief: rusty robots that felt like clumsy metal people, and C-3PO, fluent in over six million forms of communication, a walking stack of memory and social awkwardness, yet gloriously useless at solving problems.

Our culture is now so suffused with talk of AI, equal parts apocalypse and salvation, that those uncomplicated machines no longer look like a believable fantasy. You can't avoid hearing about Kurzweil's The Singularity Is Near or the endless debates about AI safety, in which we project every possible future onto AI, from miracle cures to a single machine that out-thinks us all.

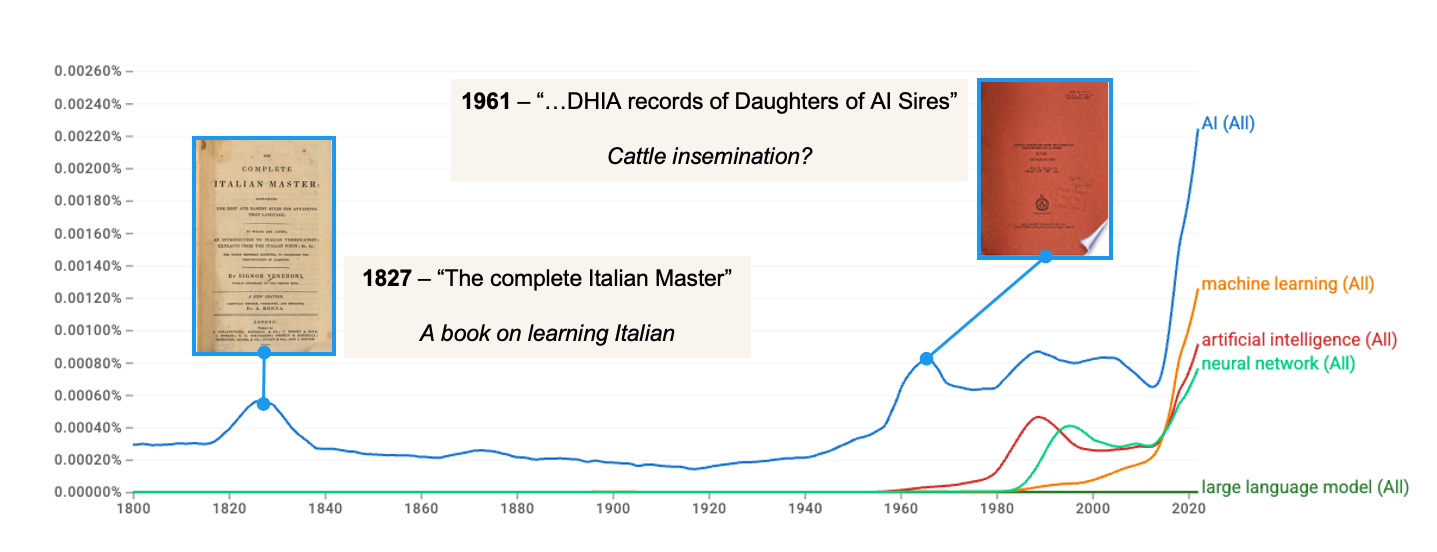

Despite how surrounded we now are by AI, it has only exploded into our collective consciousness relatively recently. See the Google Ngram chart showing how often some key AI terms (AI, artificial intelligence, large language model, machine learning, neural network) appear in books over the past ~220 years.

Unsurprisingly, those terms barely appear for most of the record, apart from a couple of quirky false starts in the 19th and mid-20th centuries. Sadly, the data ends in 2022, and one can only guess how much further those terms have shot up since.

These days people are quick to equate AI with the LLM-based chatbots, such as Claude or ChatGPT, that have become so ubiquitous. We have forgotten earlier advances such as Deep Blue, the first computer to beat a reigning world chess champion, Garry Kasparov, winning a game in 1996 and a full match in 1997; or AlphaFold, which has revolutionised our understanding of protein folding by predicting a protein's 3D structure from its amino acid sequence. Not to mention even earlier attempts, but those are for a later post on AI history.

From Rules to Shoggoths: Two Paths to AI

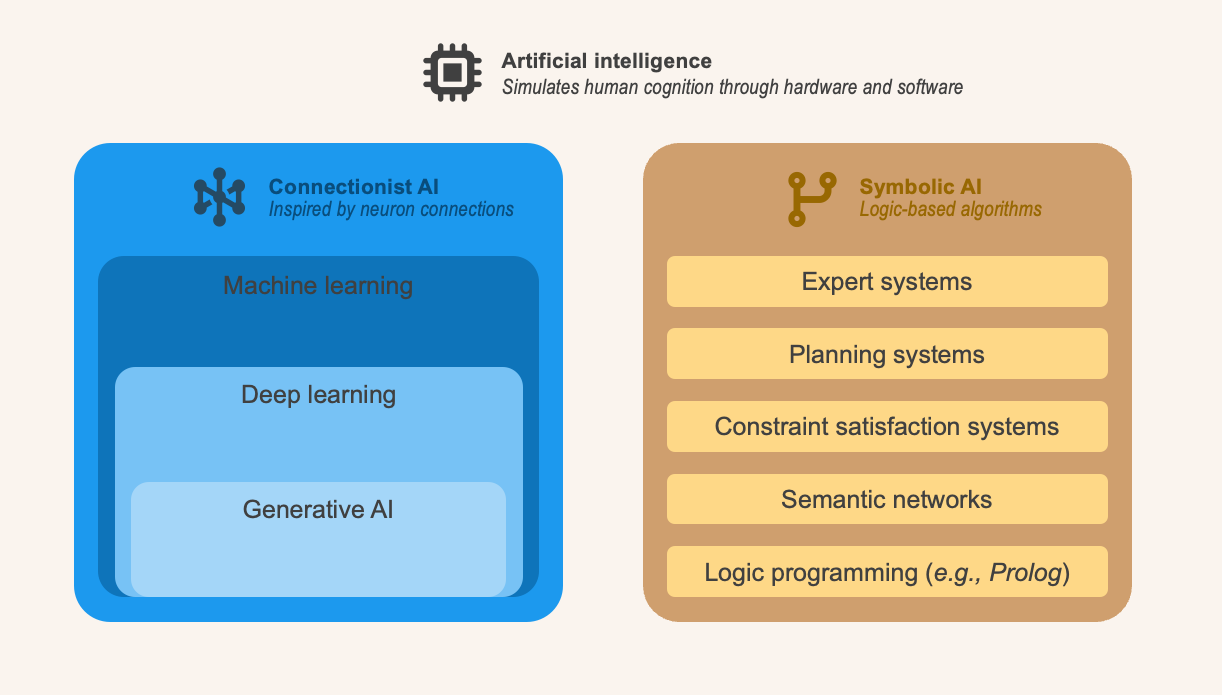

So what is Deep Blue, and how is it related to the LLMs we are more familiar with today? AI has always been a story of two tribes. On one side, Symbolic AI: the rule-writers. They believed you could capture intelligence by listing out every "if-then" in the world. Expert systems, planning algorithms, Prolog: think of them as obsessive spreadsheet builders who try to model reality cell by cell. Impressive in theory, brittle in practice. Miss a rule and the whole thing crumbles. Deep Blue sits largely in this tribe: it won through brute-force search of hundreds of millions of chess positions per second, guided by a hand-crafted evaluation function, rather than by a neural network learning patterns.
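To make the rule-writers' approach concrete, here is a minimal sketch of a forward-chaining rule engine, the basic mechanism behind many classic expert systems. The facts and rules (about birds) are invented for illustration; real systems used dedicated languages and rule bases with thousands of entries. The second call shows the brittleness: give it something no rule anticipates and it has nothing to say.

```python
# A toy forward-chaining rule engine, in the spirit of early expert systems.
# The rules below are hypothetical examples, not taken from any real system.

# Each rule: (set of facts that must all hold, fact to conclude)
RULES = [
    ({"has_feathers", "lays_eggs"}, "is_bird"),
    ({"is_bird", "can_fly"}, "can_migrate"),
    ({"is_bird", "swims", "cannot_fly"}, "is_penguin"),
]


def forward_chain(facts, rules):
    """Apply rules repeatedly until no new facts can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # Fire a rule when all of its conditions are already known.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


if __name__ == "__main__":
    print(sorted(forward_chain({"has_feathers", "lays_eggs", "can_fly"}, RULES)))
    # A bat flies but has fur, not feathers: no rule covers it, so nothing new is concluded.
    print(sorted(forward_chain({"has_fur", "can_fly"}, RULES)))
```

Every piece of "knowledge" here was typed in by a person; the system never improves unless someone writes another rule.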

The other tribe is Connectionist AI. Instead of writing rules, they throw data at artificial neurons and let the system figure out the patterns, essentially growing a model from mountains of data and GPU compute. Machine learning, deep learning, and today's generative models all live here. It's messy, it's statistical, but it turns out to scale in a way that hand-coded rules never did.
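For contrast with the rule engine above, here is a sketch of the connectionist idea at its smallest: a single artificial neuron (a classic perceptron) that learns logical AND from four labelled examples rather than from a written rule. The learning rate and epoch count are arbitrary choices for this toy; modern networks use millions or billions of such units and different training methods.

```python
# A single artificial "neuron" (a perceptron) that learns logical AND
# from labelled examples instead of from a hand-written rule.


def predict(weights, bias, x):
    """Fire (1) if the weighted sum of inputs exceeds zero, else 0."""
    total = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if total > 0 else 0


def train_perceptron(data, epochs=20, lr=1.0):
    """Perceptron learning rule: after each mistake, nudge weights toward the right answer."""
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in data:
            error = target - predict(weights, bias, x)  # -1, 0 or +1
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

if __name__ == "__main__":
    w, b = train_perceptron(AND_DATA)
    for x, target in AND_DATA:
        print(x, "->", predict(w, b, x), "expected", target)
```

Nobody tells the neuron what AND means; the weights settle wherever the examples push them.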

One camp starts from the top down (logic, rules, definitions), the other from the bottom up (patterns, data, probability).

Shoggoth: The inscrutable matrix of billions of numbers

Our blog parrot in the hero image is looking unhappily at a little "Shoggoth", but what is that? In AI circles, "Shoggoth" is a metaphor drawn from H. P. Lovecraft's At the Mountains of Madness to describe large language models (LLMs) and other advanced AI systems as formless, alien, powerful entities whose inner workings are mysterious and not fully understood.

The meme has been around for a while, but in 2022 it was used to depict two versions of a model: "GPT-3" as a raw Shoggoth, and "GPT-3 + RLHF (reinforcement learning from human feedback)" as the same Shoggoth wearing a small smiley-face mask. The mask symbolises how developers try to make the AI behave in human-friendly ways, even though the core remains inscrutable.

The Long Road from Spam Filters to ChatGPT

If you zoom into connectionist AI, you can think of the categories as concentric layers, each one a deeper cut into how machines learn.

The outer layer is machine learning: algorithms that pick up patterns from data rather than following hand-coded rules. A spam filter that trains itself on past emails is a simple example: it learns which words tend to appear in spam and then generalises to messages it has never seen.
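A self-training spam filter can be sketched with naive Bayes, one classic (and very simplified) way to do it: count how often each word appears in spam and in legitimate ("ham") email, then score new messages by those learned frequencies. The six training emails are invented, and real filters use far more data and richer features.

```python
import math
from collections import Counter

# Tiny made-up training set: (email text, label)
TRAINING = [
    ("win a free prize now", "spam"),
    ("claim your free money", "spam"),
    ("cheap pills win big", "spam"),
    ("meeting moved to monday", "ham"),
    ("lunch on friday with the team", "ham"),
    ("project notes for monday meeting", "ham"),
]


def train(examples):
    """Count how often each word appears in spam vs. ham emails."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    doc_counts = Counter()
    for text, label in examples:
        word_counts[label].update(text.split())
        doc_counts[label] += 1
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    return word_counts, doc_counts, vocab


def classify(text, word_counts, doc_counts, vocab):
    """Naive Bayes: pick the label with the highest log-probability for the words seen."""
    total_docs = sum(doc_counts.values())
    scores = {}
    for label in ("spam", "ham"):
        score = math.log(doc_counts[label] / total_docs)  # prior: how common the label is
        total_words = sum(word_counts[label].values())
        for word in text.split():
            # Add-one (Laplace) smoothing so an unseen word doesn't zero out the score
            count = word_counts[label][word] + 1
            score += math.log(count / (total_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)


if __name__ == "__main__":
    model = train(TRAINING)
    print(classify("free prize for you", *model))
    print(classify("monday team meeting", *model))
```

The filter never sees a rule like "free means spam"; it infers that association from the examples, which is exactly the shift from symbolic to learned behaviour.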

Inside that sits deep learning. Here the algorithms use neural networks with many stacked layers, which makes them capable of handling far more complex data like images, speech, or natural language. Deep learning is what powers image recognition on your phone, voice assistants like Siri, and real-time translation tools.
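Why does stacking layers matter? A single neuron like the perceptron can only draw one straight dividing line, so it cannot compute XOR ("one or the other, but not both"). Two stacked layers can. In this sketch the weights are set by hand and a simple step activation is used for readability; in real deep learning the weights are learned from data and the activations are smooth functions.

```python
# Two stacked layers of simple neurons computing XOR,
# something no single neuron of this kind can do on its own.


def step(z):
    return 1 if z > 0 else 0


def layer(inputs, weights, biases):
    """One fully connected layer: each neuron takes a weighted sum of all inputs."""
    return [step(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


# Hidden layer: neuron 1 behaves like OR, neuron 2 like AND (hand-picked weights).
HIDDEN_W = [[1, 1], [1, 1]]
HIDDEN_B = [-0.5, -1.5]
# Output layer: "OR but not AND", which is XOR.
OUT_W = [[1, -1]]
OUT_B = [-0.5]


def xor_network(a, b):
    hidden = layer([a, b], HIDDEN_W, HIDDEN_B)
    return layer(hidden, OUT_W, OUT_B)[0]


if __name__ == "__main__":
    for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
        print(a, b, "->", xor_network(a, b))
```

Each extra layer builds new features out of the previous layer's outputs; deep networks repeat that trick many times over.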

At the very core is generative AI. These models don't just recognise; they create. Large language models such as GPT generate fluent text, Midjourney spins up original images, and music models compose new tracks. It's the same statistical machinery, but instead of labelling the world, it produces new artifacts that look convincingly human-made.
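An LLM generates text one token at a time, each time predicting a likely continuation of what came before. The sketch below keeps only that loop and swaps the neural network for the crudest possible stand-in, a word-level bigram model that samples the next word from those it has seen following the current one. The corpus is invented and the output will be far from fluent; the point is the generate-one-step-at-a-time idea, not the quality.

```python
import random
from collections import defaultdict

# A tiny invented training corpus ("." is treated as a word).
CORPUS = "the cat sat on the mat . the dog sat on the rug . the cat saw the dog ."


def build_bigrams(text):
    """Record which words were observed to follow each word."""
    followers = defaultdict(list)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers


def generate(followers, start, length, seed=0):
    """Sample each next word in proportion to how often it followed the previous one."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length - 1):
        options = followers.get(out[-1])
        if not options:
            break  # dead end: this word was never followed by anything
        out.append(rng.choice(options))
    return out


if __name__ == "__main__":
    print(" ".join(generate(build_bigrams(CORPUS), "the", 10)))
```

Because each choice only looks one word back, the output can wander into sentences that never appeared in the corpus, a very small taste of "producing new artifacts".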

Each inner circle relies on the outer one. Today's generative AI is built on deep learning, which in turn is a specialised form of machine learning. The deeper you go, the more surprising (and, to many of us, magical) the outputs start to feel.

Symbolic AI and connectionist AI may look like rival camps, but together they illustrate AI’s evolving story: from brittle rule-based systems to adaptive pattern-recognisers to generative models that can create. As technology advances, our definition of “intelligence” keeps expanding, and tools we once marvelled at become mundane. The next breakthroughs—perhaps systems that blend symbolic reasoning with generative fluency—will again reset our expectations of what machines can do.

The joy of hard problems

The dictionary definition of AI is usually something like "the simulation of human intelligence in machines." To me, that misses the point. I prefer to think of AI as a tool: something that amplifies human thinking, helping us tackle problems that would be too complex or time-consuming for our minds alone.

That framing matters, because not all tools change us in the same way. Compare an abacus with a calculator. Both make arithmetic easier. But the abacus is a kind of "mind gym": the more you use it, the stronger your mental arithmetic gets. The calculator, by contrast, takes the skill out of your head and puts it in silicon. The more you use it, the less you practise, and your own capacity atrophies.

That’s the tension with AI. If we let machines solve problems for us, we risk losing the growth, meaning, and even joy that comes from solving things ourselves. The fastest person alive already exists—we can watch them at the Olympics. But most of us still run, not because we’ll ever win gold, but because the act of striving is good for our health and our soul. Likewise, IKEA makes furniture problems “cheaply solved.” Yet many of us (myself included) still take pleasure in the harder path: building our own, carving wood, shaping something unique with our hands. It isn’t efficient, but it is deeply human.

So what happens if we one day create “the last invention”, an Artificial General Intelligence or even superintelligence that can solve any problem we throw at it? As statistician I. J. Good warned back in 1965, the first ultraintelligent machine might also be the last invention humanity needs to make. That sounds like the end of the story. But it might also be the beginning of a new one, where the real challenge isn’t what problems AI can solve, but what problems we choose to solve ourselves.

That’s why we need not just technical guardrails but cultural norms: ways of weaving AI into our lives so it enhances rather than hollows out what it means to be human. As The Free Press recently argued in “How to Survive Artificial Intelligence,” the real test of our age is whether we can maintain meaning and relevance when we are no longer the smartest entities in the room.

AI may be the last invention. But whether it becomes our crutch or our catalyst—that choice is still ours. If we can work out norms that nudge our use of AI toward augmentation rather than replacement, we’ll keep the joy of hard problems alive. If not, the time we save will cost us the meaning we lose.

Some useful definitions

RAG - Stands for Retrieval Augmented Generation. In its simplest form, it is a technique that enhances the output of large language models by finding relevant information (Retrieve), adding it to the model's prompt as extra context (Augment), and producing a better-grounded response for the user (Generate).
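The three RAG steps can be sketched in a few functions. Everything here is an illustrative assumption: the documents are invented, retrieval is naive word overlap (real systems typically use vector embeddings and a search index), and call_llm is a hypothetical placeholder for whichever model API you actually use.

```python
# Minimal RAG sketch: retrieve -> augment -> generate.
# DOCUMENTS is made up; call_llm is a hypothetical stand-in, not a real API.

DOCUMENTS = [
    "Deep Blue defeated Garry Kasparov in a six-game match in 1997.",
    "AlphaFold predicts protein structures from amino acid sequences.",
    "RLHF trains a reward model from human preference rankings.",
]


def retrieve(question, docs, k=1):
    """Retrieve: rank documents by how many words they share with the question."""
    q_words = set(question.lower().split())
    ranked = sorted(docs, key=lambda d: len(q_words & set(d.lower().split())), reverse=True)
    return ranked[:k]


def augment(question, context):
    """Augment: place the retrieved text in the prompt the model will see."""
    context_block = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{context_block}\n\nQuestion: {question}"


def call_llm(prompt):
    """Generate: hypothetical placeholder for a real LLM call."""
    return f"[model response to a {len(prompt)}-character prompt]"


def rag_answer(question, docs):
    context = retrieve(question, docs)
    prompt = augment(question, context)
    return call_llm(prompt)


if __name__ == "__main__":
    print(augment("how does alphafold predict protein structures",
                  retrieve("how does alphafold predict protein structures", DOCUMENTS)))
```

Note that the model's weights never change: the retrieved facts only travel inside the prompt.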

Inference - This is essentially what happens when you ask an LLM a question. The model applies what it learned during its training run, with its weights now fixed, to analyse input data, generate new content, make decisions, or make predictions.
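One small but central piece of LLM inference is turning the model's raw scores ("logits") for candidate next tokens into probabilities and picking one. The sketch below assumes a trained model has already produced those scores; the words and numbers are invented. It shows softmax with a temperature setting and greedy decoding (always taking the most probable word), one of several common decoding strategies.

```python
import math

# Hypothetical scores a trained model might assign to candidate next words
# after "The cat sat on the". The numbers are invented for illustration.
LOGITS = {"mat": 3.0, "sofa": 2.0, "moon": 0.5}


def softmax(scores, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    scaled = {w: s / temperature for w, s in scores.items()}
    top = max(scaled.values())  # subtract the max for numerical stability
    exps = {w: math.exp(s - top) for w, s in scaled.items()}
    total = sum(exps.values())
    return {w: e / total for w, e in exps.items()}


def greedy_next_word(scores):
    """Greedy decoding: always pick the most probable word."""
    probs = softmax(scores)
    return max(probs, key=probs.get)


if __name__ == "__main__":
    print(softmax(LOGITS))
    print(softmax(LOGITS, temperature=0.5))
    print(greedy_next_word(LOGITS))
```

A real model repeats this step for every token it writes, feeding each chosen token back in as input.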

RLHF - Stands for Reinforcement Learning from Human Feedback. It is a technique for aligning AI models with human preferences. In a typical pipeline, people rank several model outputs, a separate reward model is trained to predict those rankings, and reinforcement learning is then used to steer the language model toward responses the reward model scores as helpful, safe, or appropriate.
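The reward-model step of RLHF can be sketched with a common pairwise formulation: for each pair where humans preferred one response over another, minimise -log sigmoid(r(preferred) - r(rejected)). This is a heavily simplified assumption-laden toy: each response is reduced to two invented features (helpfulness, rudeness), the reward model is linear instead of a neural network reading full text, and the later reinforcement-learning stage is left out entirely.

```python
import math

# Each response is described by two invented features: (helpfulness, rudeness).
# Each pair: (features of the response humans preferred, features of the one they rejected).
PREFERENCES = [
    ((0.9, 0.1), (0.4, 0.2)),
    ((0.7, 0.0), (0.8, 0.9)),
    ((0.6, 0.2), (0.2, 0.1)),
    ((0.5, 0.1), (0.6, 0.8)),
]


def reward(w, x):
    """A linear reward model: a weighted sum of the response's features."""
    return sum(wi * xi for wi, xi in zip(w, x))


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_reward_model(prefs, steps=500, lr=0.5):
    """Minimise the average of -log sigmoid(r(chosen) - r(rejected)) by gradient descent."""
    w = [0.0, 0.0]
    for _ in range(steps):
        grad = [0.0, 0.0]
        for chosen, rejected in prefs:
            margin = reward(w, chosen) - reward(w, rejected)
            # Derivative of -log sigmoid(margin) w.r.t. w is -(1 - sigmoid(margin)) * (chosen - rejected)
            coeff = -(1.0 - sigmoid(margin))
            for i in range(len(w)):
                grad[i] += coeff * (chosen[i] - rejected[i])
        w = [wi - lr * gi / len(prefs) for wi, gi in zip(w, grad)]
    return w


if __name__ == "__main__":
    w = train_reward_model(PREFERENCES)
    print("learned weights (helpfulness, rudeness):", w)
```

With these toy pairs the learned weights should reward helpfulness and penalise rudeness, which is the kind of signal the RL stage would then optimise against.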

...and some links for further reading

- https://www.forbes.com/sites/robtoews/2019/11/17/to-understand-the-future-of-ai-study-its-past/

- https://www.icaew.com/insights/viewpoints-on-the-news/2024/nov-2024/types-of-ai-how-are-they-classified
